SchNet#

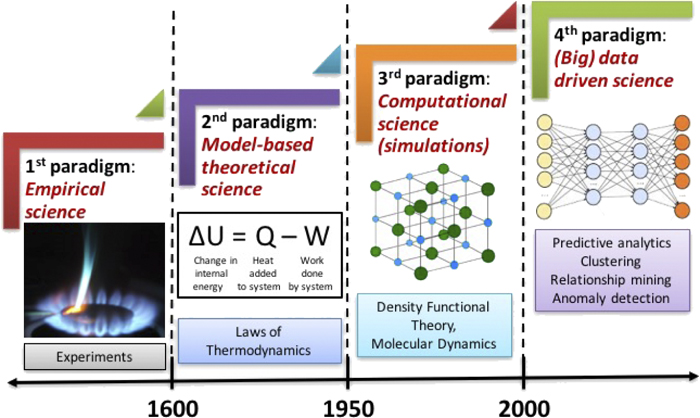

1. Background#

CNN is dealing with grids such as image, videos. While atom (cartesian coordinates) are continuous. Such discretizing space loses precise geometric information.

Previous model only predict energy, forces derived from those models are not conserved (anti-physical)

2. Introduction#

All of the computation is applied to stable systems which means equilibrium conditions. i.e., local minima of the potential energy

surface \(E(r_1,...,r_n)\) where \(r_i\) is the position of atom \(i\)

But it’s not clear how obtain an equilibrium state without optimize the position of atoms. Therefore, we need to compute both the total energy \(E(r_1,...,r_n)\) and the forces acting on the atoms.

In order to learn the equilibrium state, the model should learn a representation for molecules using equilibrium and non-equilibrium conformations.

Invariance of the molecular energy with respect to rotation, translation and atom indexing.

2.1. Continuous-filter convolutions#

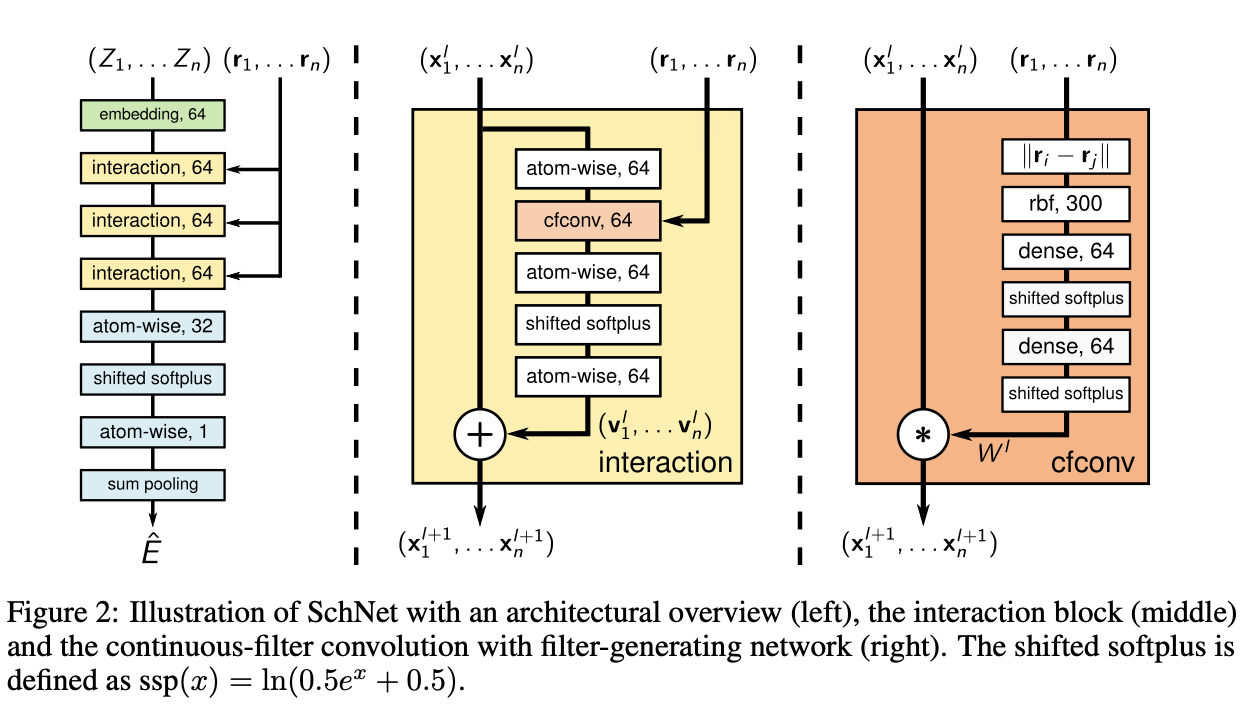

Given the feature representations of \(n\) objects \(X^l = (x^l_1,...,x^l_n)\) with \(x^l_i\in\mathbb{R}^F\) at locations \(R=(r_1,...,r_n)\) with \(r_i \in \mathbb{R}^D\) , the continuous-filter convolutional layer \(l\) requires a filter-generating function

Let us first compare the difference of conventional CNN and their “continuous-filter convolution”:

Conventional CNN: \((x*w)(t)= \int x(a)w(t-a)da\)

Continuous-filter convolution: \((x^l*w^l) = \sum x_j^I\circ w^l(r_i-r_j)\), \(\circ\) is the element wise production.

Instead of just relying on the initial randomized weight of the filter which is implemented in common CNN (such Radom weight behaves like different grid scan strategies.) The SchNet method use atomic coordinates to get the weight of convolution \(W^l : \mathbb{R}^D\rightarrow \mathbb{R}^F\), which maps from a position to the corresponding filter values.

Where: \(x_i^l \in \mathbb{R}^F\) is the feature representation of n objects at locations \(R=(r_1,...,r_n)\) with \(r_i\in\mathbb{R}^D\)

2.2. Architecture#

**Molecular representation **

A molecule in a certain conformation can be described uniquely by a set of n atoms with nuclear charges \(Z = (Z_1,...,Z_n)\) and atomic positions \(R= (r_1,...r_n)\).

With the atomic number Z, we can use embedding to get the feature \(X^0\) at the first layer. \(a_{Z_i}\) is initially randomized type embeddings.

Dense layers, which is responsible for recombination of features

This interaction block is responsible for updating the information of geometric information \(R=(r_1,...,r_n)\) and the feature maps

In order to satisfy the requirements for modeling molecular energies, they restrict their filters for the cfconv layers to be rotationally invariant. The rotational invariance is obtained by using interatomic distances as input for the filter network.

[!NOTE]

Note there is a trick there, my understanding is that:

Since according to the harmonic characteristics of the bond. Energy has global minimum at a critical value \(d_0\), e.g. attract force at \(d>d_0\), repulsive at \(d<d_0\). By this since, just imagine that \(d_{ij}\) will be quite small because many d will be quite similar. Also, another effect by initialization of weight of dense layer will be quite small. Thus, \(wd+b\) is close to 0 at initial training stage. The following activation function will treat it as linear. That’s when the problem comes out. For \(d \pm \nabla d\), it optimize to be linear (larger/smaller weight with respect to longer distance.) But the real situation should be concave. This leads to a plateau at the beginning of training that is hard to overcome.

The they avoid this by expanding the distance with radial basis functions:

3. Training with energies and forces#

Recall their goal. One is predict the energy, another is preserve the force conservation.